Welcome to this tutorial on the complexities of machine learning in 2023. Machine learning has evolved significantly over the years, creating both new opportunities and new challenges. In this tutorial, we explore the complexities that researchers, practitioners, and enthusiasts face in the current landscape.

Table of Contents

- Introduction
- Data Complexity
  - Data Volume
  - Data Variety
  - Data Velocity
- Model Complexity
  - Deep Learning Architectures
  - Transformer Models
  - Continual Learning
- Ethical and Social Complexities
  - Bias and Fairness
  - Explainability and Interpretability
  - AI for Good
- Hardware and Resource Complexities
  - Hardware Acceleration
  - Energy Efficiency
- Conclusion

1. Introduction

In 2023, machine learning has expanded its reach across various domains, from healthcare to finance, and from autonomous vehicles to natural language processing. However, with this growth comes a set of new complexities that researchers and practitioners must address. Let’s delve into these complexities in detail.

2. Data Complexity

Data Volume

One of the most significant challenges is handling massive volumes of data. The rise of IoT devices, sensors, and digital platforms has led to an exponential increase in data generation. Machine learning models need to be scalable and efficient to process and learn from these vast datasets.
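One common way to cope with data that does not fit in memory is to process it in chunks and keep only running summaries. The sketch below is an illustration rather than a prescribed method: it computes a mean and variance in a single pass using Welford's algorithm. The function names `chunks` and `running_mean_var` and the toy generator are assumptions made for this example.

```python
from typing import Iterable, Iterator, List, Tuple


def chunks(values: Iterable[float], size: int) -> Iterator[List[float]]:
    """Yield successive lists of at most `size` items from any iterable."""
    batch: List[float] = []
    for value in values:
        batch.append(value)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:  # emit the final, possibly shorter, chunk
        yield batch


def running_mean_var(stream: Iterable[float], chunk_size: int = 1000) -> Tuple[int, float, float]:
    """Single-pass count, mean, and population variance (Welford's algorithm)."""
    n, mean, m2 = 0, 0.0, 0.0
    for batch in chunks(stream, chunk_size):
        for x in batch:
            n += 1
            delta = x - mean
            mean += delta / n
            m2 += delta * (x - mean)  # uses the already-updated mean
    variance = m2 / n if n else 0.0
    return n, mean, variance


# Hypothetical data source: a generator, so the full dataset is never held in memory.
n, mean, var = running_mean_var((float(i) for i in range(1, 11)), chunk_size=4)
```

Only one chunk and three numbers are alive at any time, so memory use stays constant however long the stream is.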

Data Variety

Data is no longer limited to structured information. Unstructured and semi-structured data such as images, audio, and text play a crucial role in many applications. Incorporating diverse data types into models requires new approaches to feature extraction, representation, and fusion.
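As a rough illustration of fusion, the sketch below combines a text field and numeric fields into one feature vector ("early fusion"). It assumes a hashing-trick bag of words for the text and min-max scaling for the numbers. The function names, bucket count, and example values are all hypothetical, and real systems often use learned embeddings instead.

```python
import zlib
from typing import List, Sequence


def hash_text(text: str, n_buckets: int = 8) -> List[float]:
    """Bag-of-words counts folded into a fixed number of buckets (hashing trick)."""
    vec = [0.0] * n_buckets
    for token in text.lower().split():
        # crc32 is stable across runs, unlike Python's built-in hash() for strings
        vec[zlib.crc32(token.encode("utf-8")) % n_buckets] += 1.0
    return vec


def min_max_scale(values: Sequence[float], lows: Sequence[float], highs: Sequence[float]) -> List[float]:
    """Scale each value into [0, 1] using known per-feature bounds."""
    return [
        (v - lo) / (hi - lo) if hi > lo else 0.0
        for v, lo, hi in zip(values, lows, highs)
    ]


def fuse(text: str, numeric: Sequence[float], lows: Sequence[float],
         highs: Sequence[float], n_buckets: int = 8) -> List[float]:
    """Early fusion: concatenate the text vector and the scaled numeric vector."""
    return hash_text(text, n_buckets) + min_max_scale(numeric, lows, highs)


# Hypothetical record: a free-text note plus two numeric fields.
features = fuse("late payment late fee", [250.0, 3.0],
                lows=[0.0, 0.0], highs=[1000.0, 10.0])
```

The result is a single fixed-length vector that any standard model can consume, at the cost of occasional hash collisions between tokens.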

Data Velocity

Real-time or near-real-time decision-making is now a requirement in various applications like fraud detection and recommendation systems. This demands models that can process and adapt to data streams with high velocity, ensuring accurate and up-to-date predictions.
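One way to keep predictions current on a fast stream is online learning, where the model is updated after every event instead of being retrained in batches. The following is a minimal sketch, assuming a logistic regression trained with stochastic gradient descent. The class name, learning rate, and synthetic stream are illustrative assumptions, not a production design.

```python
import math
from typing import List, Sequence


class OnlineLogisticRegression:
    """Logistic regression trained one event at a time with SGD."""

    def __init__(self, n_features: int, lr: float = 0.5) -> None:
        self.w: List[float] = [0.0] * n_features
        self.b = 0.0
        self.lr = lr

    def predict_proba(self, x: Sequence[float]) -> float:
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        z = max(-30.0, min(30.0, z))  # clamp so exp() cannot overflow
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, x: Sequence[float], y: int) -> None:
        """One gradient step on the log loss for a single labeled event."""
        error = self.predict_proba(x) - y
        self.w = [wi - self.lr * error * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * error


# Hypothetical stream: one feature in [0, 1), labeled 1 when it exceeds 0.5.
model = OnlineLogisticRegression(n_features=1)
for i in range(200):
    x = [(i % 10) / 10.0]
    y = 1 if x[0] > 0.5 else 0
    score = model.predict_proba(x)  # predict first, as a live system would
    model.update(x, y)              # then learn once the true label arrives
```

Each update costs time proportional to the number of features, so the per-event latency does not grow with the amount of history seen.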

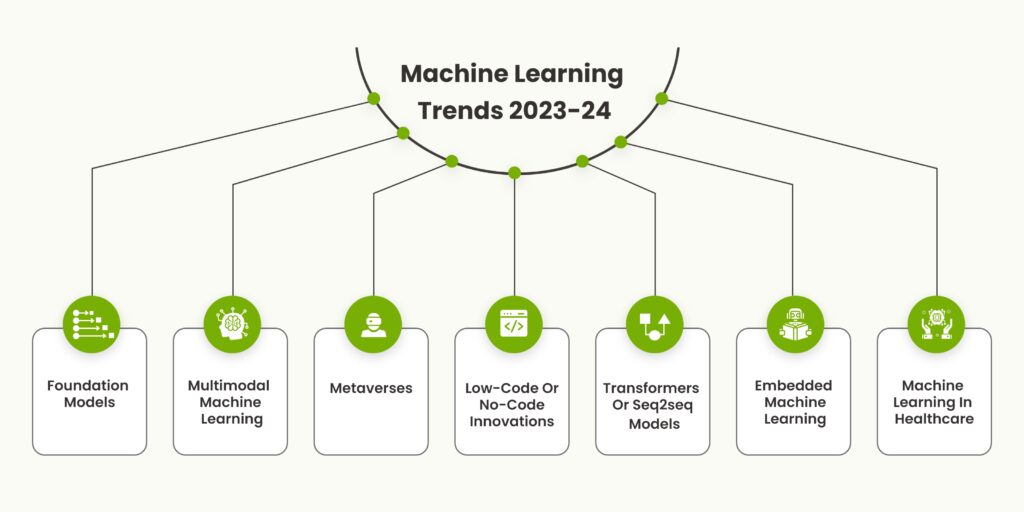

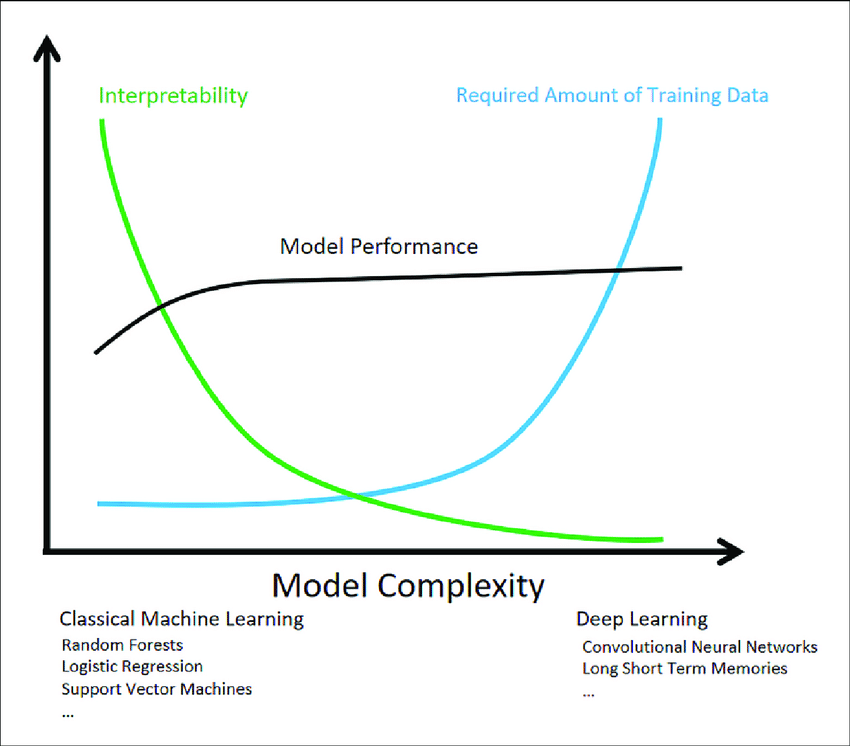

3. Model Complexity

Deep Learning Architectures

Deep learning has shown remarkable success, but complex neural architectures demand substantial computational resources. Models like convolutional neural networks (CNNs) for computer vision and recurrent neural networks (RNNs) for sequential data have become deeper and more intricate, requiring specialized hardware for efficient training and inference.

Transformer Models

Transformer models, built around the attention mechanism, have revolutionized natural language processing. However, the largest of these models are massive and resource-intensive. Keeping their training and deployment costs manageable while maintaining performance is a crucial challenge.
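To make the cost concrete, here is a minimal pure-Python sketch of scaled dot-product attention, the core operation in a transformer layer. It is written for readability, not speed, and the toy matrices are assumptions for illustration. Real implementations use batched tensor libraries and multiple attention heads.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V, computed one query row at a time."""
    d_k = len(k[0])
    out: Matrix = []
    for q_i in q:
        # One score per key: this inner loop is what makes cost grow with
        # (number of queries) x (number of keys).
        scores = [
            sum(a * b for a, b in zip(q_i, k_j)) / math.sqrt(d_k)
            for k_j in k
        ]
        weights = softmax(scores)
        out.append([
            sum(w * v_j[c] for w, v_j in zip(weights, v))
            for c in range(len(v[0]))
        ])
    return out


# Toy example: a zero query attends equally to both keys,
# so the output is the average of the value rows.
result = attention(q=[[0.0, 0.0]],
                   k=[[1.0, 0.0], [0.0, 1.0]],
                   v=[[1.0, 2.0], [3.0, 4.0]])
```

Because every query is scored against every key, doubling the sequence length roughly quadruples the work in self-attention, which is one reason long-context models are expensive.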

Continual Learning

In many real-world scenarios, models need to adapt to changing data distributions over time. Continual learning aims to enable models to learn from new data without forgetting previously learned information. Handling catastrophic forgetting and ensuring model stability during continual learning remain open research challenges.
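One widely used family of mitigations is rehearsal: keep a small memory of past examples and mix them into each new training batch. The sketch below shows only the memory part, using reservoir sampling so that every example seen so far has an equal chance of being retained. The class name and capacity are assumptions for illustration, and the training loop itself is left out.

```python
import random
from typing import Any, List


class ReservoirReplayBuffer:
    """Fixed-size memory holding a uniform random sample of everything seen."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.capacity = capacity
        self.items: List[Any] = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, example: Any) -> None:
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(example)
        else:
            # Keep the new example with probability capacity / seen.
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = example

    def sample(self, k: int) -> List[Any]:
        """Draw up to k stored examples to replay alongside new data."""
        return self.rng.sample(self.items, min(k, len(self.items)))


# Hypothetical usage: examples 0..99 arrive over time; memory stays at 5 items.
buffer = ReservoirReplayBuffer(capacity=5)
for example in range(100):
    buffer.add(example)
replayed = buffer.sample(3)  # would be mixed into the next training batch
```

Replaying old examples reminds the model of earlier distributions, but it trades memory and compute for stability and may not be allowed when old data cannot be stored.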

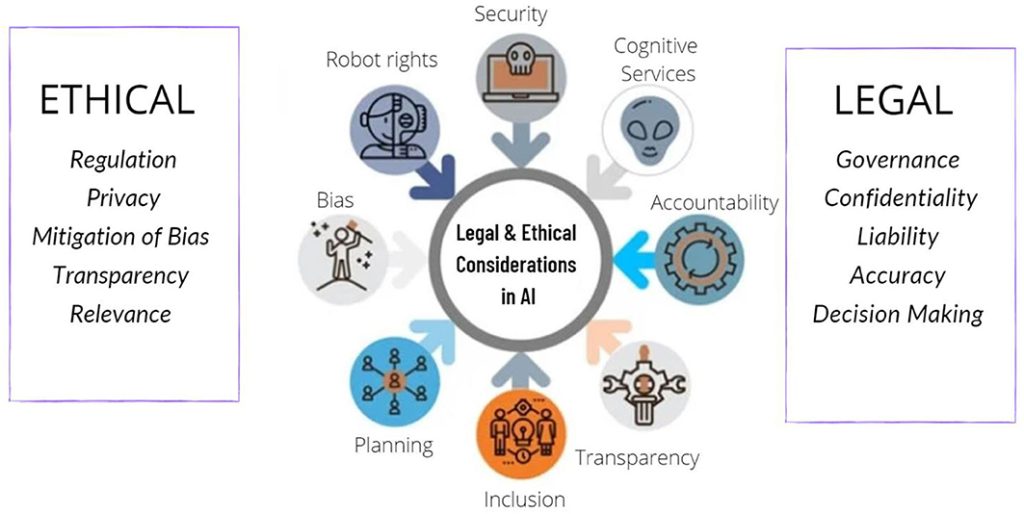

4. Ethical and Social Complexities

Bias and Fairness

As machine learning systems become deeply integrated into society, issues of bias and fairness have gained prominence. Ensuring that models do not perpetuate or amplify biases already present in the data is a critical concern. Researchers are developing algorithms that mitigate these biases and provide fairness guarantees.
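Before bias can be mitigated it has to be measured. One simple and commonly cited metric is the demographic parity difference: the gap between groups in the rate of positive predictions. The sketch below assumes binary predictions and a single group attribute. The function names and toy data are illustrative, and this metric is only one of several, sometimes conflicting, fairness definitions.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def positive_rates(predictions: Sequence[int], groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Share of positive (1) predictions within each group."""
    totals: Dict[Hashable, int] = defaultdict(int)
    positives: Dict[Hashable, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: Sequence[int], groups: Sequence[Hashable]) -> float:
    """Largest gap in positive-prediction rate between any two groups (0 = parity)."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Toy data: group "a" receives positive predictions far more often than group "b".
preds = [1, 1, 1, 0, 1, 0, 0, 0]
grps = ["a", "a", "a", "a", "b", "b", "b", "b"]
rates = positive_rates(preds, grps)
gap = demographic_parity_difference(preds, grps)
```

A gap near zero means the groups receive positive predictions at similar rates. It says nothing about whether those predictions are equally accurate for each group.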

Explainability and Interpretability

Black-box models, while effective, lack transparency, making them difficult to trust and understand. Regulatory requirements and ethical considerations demand that models provide explanations for their decisions. Balancing accuracy with interpretability is a complex problem.
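One model-agnostic way to get some insight into a black-box model is permutation importance: shuffle one feature and measure how much accuracy drops. The sketch below is a simplified illustration, assuming the model is any callable that returns a class label. The toy model and data are invented for the example.

```python
import random
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], int]


def accuracy(model: Model, X: List[List[float]], y: Sequence[int]) -> float:
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: List[List[float]], y: Sequence[int],
                           feature: int, n_repeats: int = 10, seed: int = 0) -> float:
    """Mean drop in accuracy after shuffling one feature column."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    drops = []
    for _ in range(n_repeats):
        column = [row[feature] for row in X]
        rng.shuffle(column)
        # Rebuild each row with the shuffled value in place of the original.
        X_perm = [row[:feature] + [value] + row[feature + 1:]
                  for row, value in zip(X, column)]
        drops.append(baseline - accuracy(model, X_perm, y))
    return sum(drops) / n_repeats


# Toy black box: uses only feature 0 and ignores feature 1.
def toy_model(row: Sequence[float]) -> int:
    return int(row[0] > 0.5)


X = [[i / 10.0, (i * 7 % 10) / 10.0] for i in range(10)]
y = [toy_model(row) for row in X]
importance_used = permutation_importance(toy_model, X, y, feature=0)
importance_ignored = permutation_importance(toy_model, X, y, feature=1)
```

Shuffling the ignored feature cannot change any prediction, so its importance is exactly zero, while shuffling the feature the model relies on can only hurt accuracy here.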

AI for Good

While AI technologies offer immense potential, they also raise ethical dilemmas. Ensuring that AI is used for positive social impact while minimizing negative consequences is a challenge that involves collaboration among technologists, policymakers, and society at large.

5. Hardware and Resource Complexities

Hardware Acceleration

Training deep and complex models requires powerful hardware. Graphics processing units (GPUs) and specialized accelerators such as tensor processing units (TPUs) have become essential for efficient training and inference. Optimizing algorithms for specific hardware architectures is an ongoing challenge.

Energy Efficiency

The carbon footprint of training large models has raised concerns. Researchers are working on techniques to make machine learning more energy-efficient, such as model pruning, quantization, and alternative model architectures.
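To give a feel for two of these techniques, the sketch below applies magnitude pruning and symmetric 8-bit quantization to a flat list of weights. It is a simplified illustration with assumed function names and toy values. Real frameworks operate on tensors, often prune and quantize per layer or per channel, and usually fine-tune afterwards.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric quantization: map floats to integers in [-127, 127] plus a scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]


# Toy weights: prune half of them, then store the rest as 8-bit integers.
weights = [0.5, -0.1, 0.03, -0.8, 0.2, 0.01]
pruned = magnitude_prune(weights, sparsity=0.5)
codes, scale = quantize_int8(pruned)
restored = dequantize(codes, scale)
```

Pruned weights can be skipped or stored sparsely, and 8-bit codes take a quarter of the space of 32-bit floats. The price is a small reconstruction error, bounded here by half the scale for each weight.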

6. Conclusion

The machine learning landscape in 2023 presents an array of exciting opportunities alongside intricate challenges. As data continues to grow, models become more sophisticated, and AI’s impact on society deepens, researchers and practitioners must collaborate to navigate these complexities. By addressing data, model, ethical, and hardware-related challenges, the machine learning community can shape AI’s future for the better.
